Virtual production with LED screens is likely to become the gold standard for broadcast and cinematic production. The technology proved its advantages during the pandemic: it cuts the need for travel and allows an entire production to be shot under one roof.

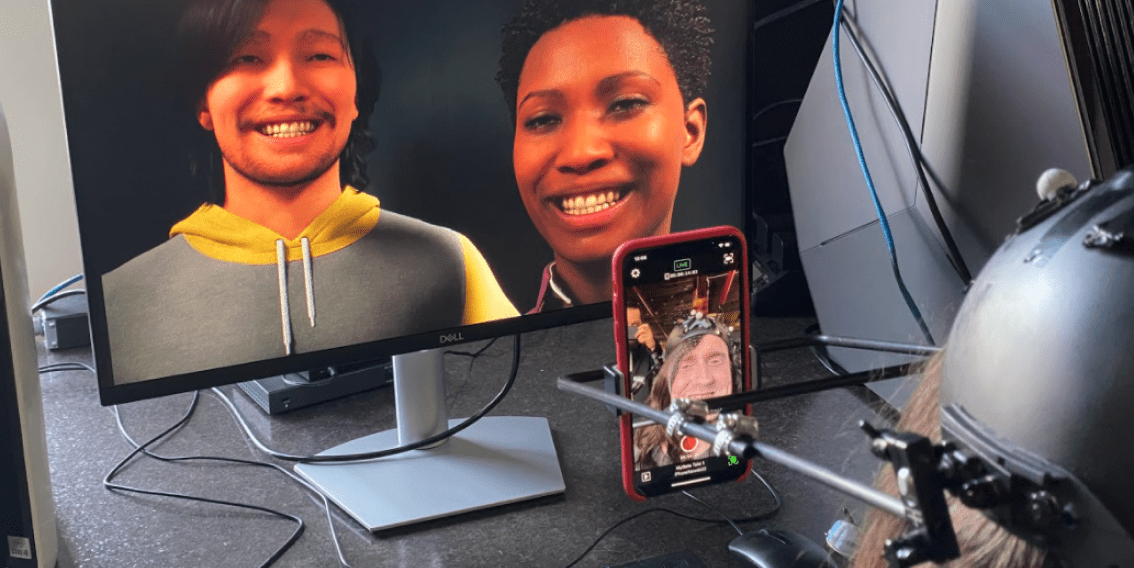

Previously, creating a single digital human took a great deal of work and skill. From the research and scanning phase to the 3D modeling of the facial geometry, a professional studio needed a month or two to get there.

MetaHuman Creator, launched by Epic Games, changes everything.

With no knowledge of technology, 3D vectors, art, or human physiology, anyone can now create a fully rigged, photorealistic digital human, complete with hair and clothing, in under two hours, or even in a few minutes.

"The demo version looks very good and we're very excited," says Samy Lamouti, technical director at Neweb Labs. "Now we can create the digital character quickly, or give a production's art director full control over the character's look: they can build the character themselves on an iPad from their living room couch. That's a step that used to cost a lot of money and take a lot of time."

"It speeds up the time it takes to bring the character into our lab, where we bring it to life."

MetaHuman Creator is a cloud-based application for creating photorealistic 3D humanoid characters intuitively, with user-friendly tools. Starting from a preset human in the existing library, you can modify the nose, the face shape, the eyebrows, and so on. You can also choose from around thirty hairstyles that use Unreal Engine's strand-based hair, or hair cards for lower-end platforms.

When you are happy with your character design, you can download it through Quixel Bridge, fully rigged and ready for animation and motion capture in Unreal Engine, with all LODs included. You also get the source data as a Maya file, including the meshes, skeleton, facial rig, animation controls, and materials.

Create and animate digital character assets faster

In a recent discussion on Unreal Engine's Pulse YouTube channel, Jerome Chen of Sony Pictures Imageworks described his first experience working with the new tool.

His upcoming production called for six digital characters, and he feared they could not be made with the budget and resources he had. "It's a terrifying prospect every time you have to create a character: you never know whether it's going to work or not." He knew that with traditional techniques, "we didn't have the resources to do six." He contacted Epic to request early access to MetaHuman Creator.

He had no idea it would be so effective. "I thought it would take weeks," says Chen. But in a ninety-minute screen-sharing session on Zoom with Vladimir Mastilović of 3Lateral (now part of Epic Games), he came away with a digital version of one of the six actors he was working with. "We were really grateful to have access to that," he said.

"The beauty of the system is that the rigging is already done," he explained. MetaHuman Creator runs on a huge database of information from real people who have been scanned. Offsets from universal models are used, which gives the process a degree of standardization.

Previously, moving assets from one piece of software to another required constant thought, compromises, and corrections, and time had to be set aside to fix problems such as artifacts. "Now everything is in the same universe," says Lamouti. "With this tool, with a character ready to go, on our side we bring our expertise in motion capture, performance, and animation to set the production in motion. MetaHuman Creator eases the process by making characters look better, perform better, and reach the production stage faster."

Standardization is a game changer

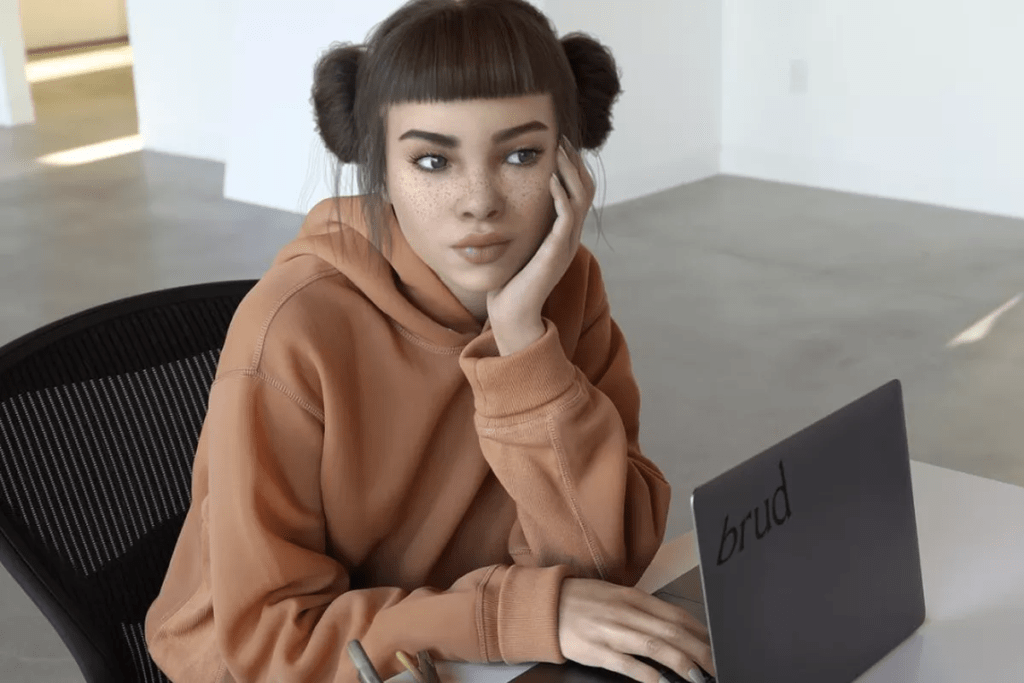

In the world of digital human modeling, "standardization was an important step that a lot of people missed," says Isaac Bratzel, chief designer and head of innovation at Brud, the creators of Lil Miquela, a digital human with more than 3 million followers on Instagram. During the Pulse panel, he also said he was particularly impressed by the hairstyle options available in the software. "They (Epic) took a lot of care in making the groom library." In his view, a character's style is extremely important; it is what makes them who they are, makes them believable, and helps the audience identify with them. "With Miquela... if we hadn't leaned on style to define who she is, it wouldn't have worked as well."

Amy Hennig of Skydance Media agrees. It is the subtleties that bring a digital character, and ultimately a story, to life. This tool makes it much easier to get into the subtext of the storytelling, she told the Pulse panel. "The 'Holy Grail' is when you don't have to think about the technology and you can reach a certain level of subtlety at the writing stage," she explained.

Before, "we had a texture map and a few polygons, and we wrote around that." Now, she says, "we can have a level of fidelity in the performance."

For example, it is now possible to write something like a character taking off their jacket into a script. Before, that was not possible. Characters were created with their clothes on. "There was nothing underneath." As a digital character, "you are your jacket," she explained.

Technology is a means to an end, she said. "The end is a deeply believable, immersive story, where you're not pulled out by friction."

This is certainly not going to eliminate artists, Hennig adds. "It frees them from all the drudgery, to let them do their art."

Neweb Labs is experimenting with this new tool and preparing to integrate it to speed up upcoming productions.

Watch the full discussion on MetaHuman Creator on Pulse, via Epic's YouTube channel.


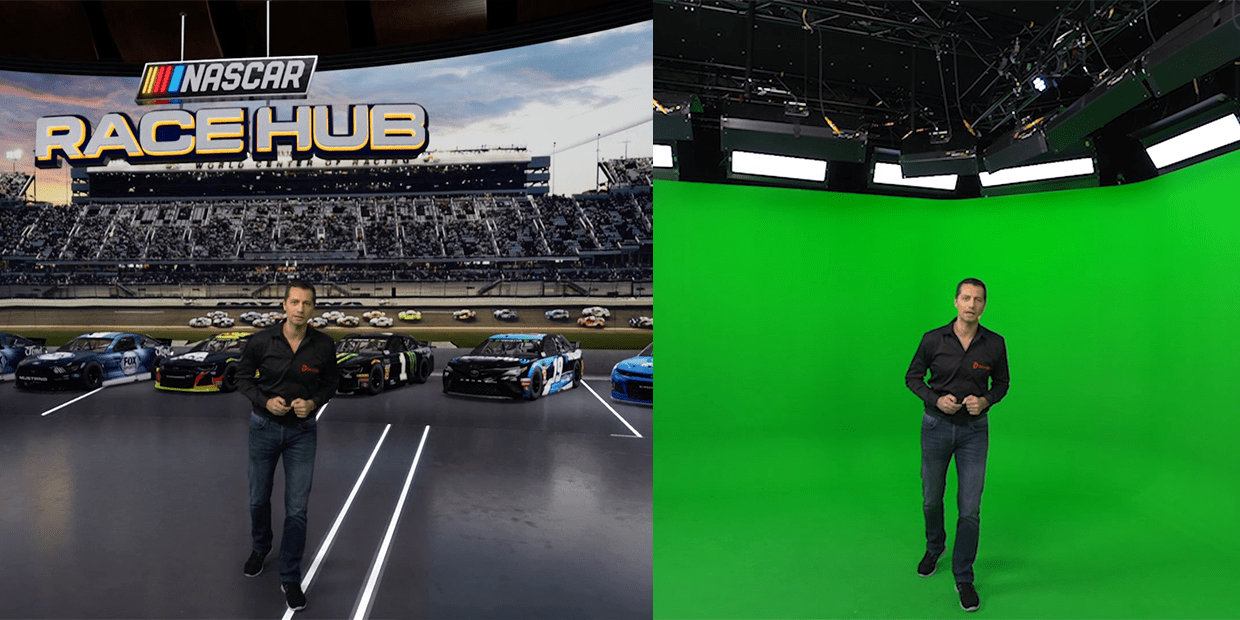


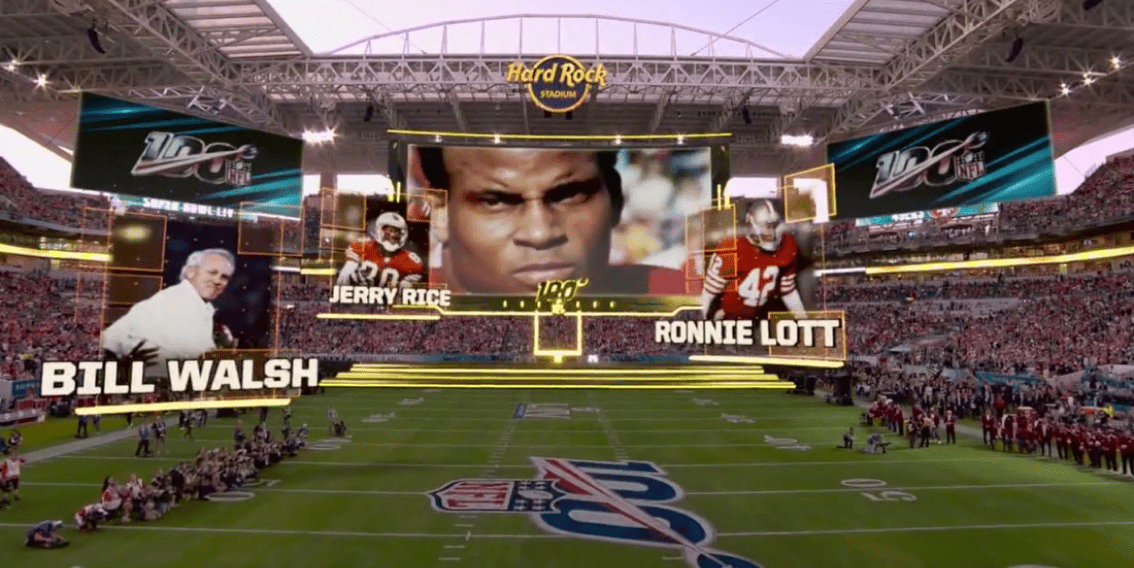


How mixed reality is shaping a new era for content creators

PRESS RELEASE FOR IMMEDIATE RELEASE

Montreal, April 20, 2021 - Neweb Labs is pleased to announce the presentation of a free online conference - Perspectives Production Virtuelle - on May 13, 2021, from 12 pm to 3 pm. The event will cover the hot topic of virtual production: the integration of mixed reality technologies on sets.

Aimed at the community of producers, directors and technical teams in the Canadian film, television and advertising sector, this event will bring together several experts in the field of content production and technology - Epic Games, Zero Density, Ncam, Dreamwall, TFOLuv, Mtl Studios Grande and Neweb Labs.

Get more information and register

Guest Speakers and Panelists

- David Morin, Head, Epic Games Los Angeles Lab

- Thibault Baras, CEO, Dreamwall

- Paul Hurteau, DOP, MTL Studios Grande

- Mike Ruddell, Global Director of Business Development, Ncam

- Jerry Henroteaux, Real-time VFX specialist, TFO LUV

- Onur Gulenc, Business Development Manager, Zero Density

- Frederic MacDonald, Director, Strategic Development, Neweb Labs

- Catherine Mathys, Director of Industry & Market Trends, Canada Media Fund

About virtual production

Virtual production is a new production technique inspired by the gaming industry that has been adapted in recent years to the television and film industry. It allows crews - producers, directors and technicians - to preview complex scenes in a movie before filming (previs) and to have real-time rendering of visual effects directly in-camera on set. With a virtual production pipeline, technical and creative teams can streamline the entire process, make real-time decisions, reduce post-production efforts and cut production costs.

About Epic Games Lab - Unreal Engine

Unreal Engine is a complete suite of development tools for anyone working with real-time technology. From design visualizations and cinematic experiences to high-quality games across PC, console, mobile, VR, and AR, Unreal Engine gives you everything you need to start, ship, grow, and stand out from the crowd.

With virtual production poised to be one of the biggest technology disruptors of the visual medium, Unreal Engine has been at the forefront of virtual production since the first workflows began to emerge. Today, virtual production can influence every aspect of the production pipeline and it is fast becoming an integral part of film, video, and broadcast pipelines, unlocking new doors for innovators seeking to carve out their foothold in the competitive visual space. By combining CG, motion capture, and real-time rendering with traditional techniques, production teams are already discovering they can achieve the director’s vision faster than ever before, and this is only the beginning.

About TFO - LUV

Innovation is part of Groupe Média TFO’s DNA. Its Virtual Worlds Laboratory (Laboratoires d’univers virtuels, or LUV) best demonstrates this. The LUV is the only studio of its kind. Located in the heart of Toronto, this dynamic new content creation process was designed by Groupe Média TFO and its partners. This unique incubator for a whole new generation of content combines the virtual worlds in real time with live-action captures, using the latest innovations in the videogame, television broadcasting and entertainment industries. The Virtual Worlds Laboratory is also marketed so its cutting-edge technology can serve and benefit the creative ecosystem and external productions.

About Zero Density

Zero Density is an international technology company dedicated to developing creative products for the broadcasting, augmented reality, live events and e-sports industries. Zero Density offers the next level of virtual production with real-time visual effects. It provides an Unreal Engine-native platform, "Reality Engine®", with advanced real-time compositing tools and its proprietary keying technology. Reality Engine® is the most photorealistic real-time 3D virtual studio and augmented reality platform in the industry.

About Ncam

Ncam are the creators of Ncam Reality, the most advanced real-time camera tracker in the world. From Star Wars to the Super Bowl, Ncam Reality is used throughout the broadcast, film and live events industries by some of the biggest brands in the world to visualize photorealistic graphics in real-time. Customers include: Amazon, CNN, Disney, ESPN, Netflix, the NFL and Sky TV.

Over the last few months, we've been speaking to key players in the film, television and advertising world in and around the province of Quebec and realized that while most people had heard of virtual production, many didn’t completely understand what it is and how it works.

That's why we decided to present a full event on this topic: InFocus Virtual Production :: Perspectives Production Virtuelle. This free online conference took place on May 13, 2021, and gathered local and international speakers and panelists from across the industry - Dreamwall, Epic Games, Mtl Studios Grande, Ncam, Neweb Labs, Zero Density - who discussed best practices, practical cases, and how virtual production can accelerate the whole creative and production process.

Our main focus

Our focus with this conference is to explain to the community of directors, producers and technical teams:

- What virtual production is

- The differences between the traditional and virtual production pipeline

- What technologies can be used, and when and how to use them

- Virtual production best practices and use cases

While virtual production was made popular in blockbusters like Avatar and more recently with shows like The Mandalorian (Star Wars), the good news is that you no longer need a Hollywood budget to bring virtual production to life, and it can be used for television and advertising as well.

Revisit the full conference

1 - Learn more about Neweb Labs

More references by Neweb Labs

2 - A quick intro to virtual production by Neweb Labs

More references by Neweb Labs

- Virtual production, learn the top four reasons filmmakers need to get on board now

- Reimagining shows and live events with virtual production

- The pros and cons of LED Screens

- Infinite possibilities with virtual production

3 - David Morin, Epic Games

"Virtual production: moving towards real-time storytelling and creative processes", a presentation by David Morin, Epic Games.

More references by Epic Games

- Academy Software Foundation (ASWF)

- Virtual Production Field Guide V2

- Unreal Engine 5

- Travis Scott Astronomical trailer (:30)

- Halon previz reel for Ford v Ferrari

- Virtual production on The Mandalorian, season 1 (ILM BTS video)

- Virtual production on Westworld, season 3 (HBO BTS video)

- Carne y Arena (trailer from ILMxLAB)

- Unreal Build: VP full playlist

- Meerkat sample release

- MetaHuman Creator Early Access Out Now | Unreal Engine

4 - Round Table: The adoption of virtual production methods in a tense climate for the film and television industry with Epic Games, Dreamwall, MTL Studios Grande.

5 - Workshop 1: Creating a virtual studio with Reality Engine by Onur Gulenc from Zero Density.

More references by Zero Density

- Fox Sports' NASCAR Set is Live with Reality Virtual Studio

- ESL, Counter-Strike Go Tournament, The Netherlands, 2019

- Virtual Line Studios, Showreel, Japan, 2020

- Eurosport, Winter Olympics, The Netherlands, 2018

- Ziggo Sport, Formula 1, The Netherlands, 2018

- TRT2, Multiple Virtual Sets, Turkey, 2019

- StreamTeam, Teleportation Interview, Finland, 2019

- Nordic Entertainment Group, NFL Super Bowl, Denmark, 2020

- The Weather Channel, Immersive Mixed Reality, USA, 2020

- Reality Virtual Studio NAB Show 2019 Demo

- RTBF, Les carnets du Bourlingueur, Belgium

- SequinAR, North Pole Experience, USA, 2019

- Reality Engine Tracked Talent Demonstration

- Reality Engine | An In-depth Look at AR Compositing

- TF1, FIFA World Cup, France, 2018

- Zero Density, Reality Keyer Video, 2020

- Bundeswehr, United Armed Forces of Germany, Germany, 2020

- Riot Games, League of Legends – World Championship Finals, China, 2017

6 - Workshop 2: The importance of camera tracking and synchronization technologies by Mike Ruddell from Ncam.

7 - Closing Remarks by Neweb Labs

MetaHuman Creator is a cloud-streamed app that enables the creation of photorealistic 3D humanoid characters intuitively, with user-friendly tools.

Virtual production is opening the doors for cutting-edge entertainment that will change live production forever.

What is a green screen?

A green screen is a uniformly colored backdrop, usually bright green, that can be used in a variety of ways.

One common use is in film and photography, where the green backdrop is removed from the shot so that it can be replaced with other imagery in post-production.

This gives filmmakers far more freedom in choosing backgrounds and can create some interesting effects.

Green screens can also be used in live settings, such as news broadcasts or sporting events. In these cases, the green screen is used to composite different images or video feeds together in real time.

There are many different applications for green screens, and the technology is constantly evolving. As a result, green screens are sure to remain a staple in the world of film and television for years to come.

A green screen is an important tool for any video editor. It allows you to superimpose one video over another, which can be very helpful for creating special effects.

For example, you can use a green screen to make it look like a person is flying through the air. Green screens are also frequently used in news programs, where they can be used to display weather maps or other information.

To use a green screen, you will need two video files: the footage shot in front of the green screen, and the video or image that you want to appear behind it. You will then need video editing software to combine the two.

This can be a bit tricky, but there are plenty of tutorials available online that can help you get started.

How do green screens work?

Chroma key screens, commonly called green screens, have been used in film since the 1930s for compositing (layering) two or more images or videos.

Chroma key compositing refers to the technique used to layer two images or videos together. Every colour has a chroma range. When a colour appears solid, it can be easily excluded from an image and made transparent.

When a chroma-coloured screen, often green or blue, is placed behind an actor, the resulting transparency allows a second video, still image or graphic to be layered into the image, behind or in front of the actor.
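
To make the idea concrete, here is a minimal sketch of chroma key compositing in Python. It is an illustration only, not how any particular editing package works: the function names, the pure-green key colour, the tolerance value and the tiny sample images are all assumptions made for this example.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]   # (red, green, blue), each 0-255
Image = List[List[Pixel]]      # rows of pixels


def color_distance(a: Pixel, b: Pixel) -> float:
    """Euclidean distance between two RGB colours."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) ** 0.5


def chroma_key(foreground: Image, background: Image,
               key: Pixel = (0, 255, 0), tolerance: float = 100.0) -> Image:
    """Swap every foreground pixel close to the key colour for the background pixel.

    The key colour and tolerance are illustrative assumptions.
    """
    result = []
    for fg_row, bg_row in zip(foreground, background):
        row = []
        for fg_px, bg_px in zip(fg_row, bg_row):
            if color_distance(fg_px, key) <= tolerance:
                row.append(bg_px)   # screen colour: treat as transparent
            else:
                row.append(fg_px)   # actor: keep as shot
        result.append(row)
    return result


# Hypothetical 2x3 images: an "actor" column between two columns of green screen.
GREEN = (0, 255, 0)
SKIN = (224, 172, 105)
MAP = (30, 60, 200)

foreground = [[GREEN, SKIN, GREEN],
              [(10, 240, 12), SKIN, GREEN]]   # slightly uneven green still keys out
background = [[MAP, MAP, MAP],
              [MAP, MAP, MAP]]

composite = chroma_key(foreground, background)
```

In this sketch, every pixel within the tolerance of the key colour is swapped for the matching background pixel, which is why an evenly lit, solid-coloured screen makes the job easier.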

This technique is commonly used to produce weather forecasts, showing a map in the background, or to place a person in front of a background to make it look like they are in a different physical space.

It can also be used to produce special effects, for example to make someone look like they are flying (by compositing a moving background behind them, while they act like they are flying) and other cinematic tricks.

The early stage of green screen

Before this technique, filmmakers used camera tricks such as double exposure to get the effects achieved by chroma key compositing. Some of the early attempts at this amazed viewers.

In his 1903 film The Great Train Robbery, Edwin S. Porter superimposed a moving train over a window in the main film image, to make it look like a train was leaving the train station.

Early films cleverly used compositing of two or more images to transport actors to imaginary locations. There is nothing more untrue than the saying “The camera never lies.”

In fact, for a hundred years of cinema, the camera has always lied. Using a combination of camera illusions to make small things look large and overlaying film exposures, filmmakers have used tricks to bring cinematic stories to life, bringing audiences into new worlds, new realities.

Green Screen techniques and innovations

1 - The Bi-pack method

First, shoot the actor on film. Then set up a camera in front of a glass painting of the desired second image, say, a big castle. Expose a new reel of film to the first film, rewind, then expose it to the second image. Voilà! But what do you do when you want the actor to move and interact without the background bleeding through?

2 - The Williams Process

Invented by Frank Williams in 1916, this process introduced the use of a blue screen to print a black silhouette of the actor on a pure white background, called a "holdout matte." Williams then reverse-printed the film to get a white silhouette against black. Finally, the shot could be composited using the bi-pack method (above).

3 - The Dunning Method

In 1925, Dodge Dunning added yellow light to the shooting process to illuminate the actor, which made it easier to separate the actor from the blue screen. This was beautifully used in the 1933 production of King Kong.

4 - Sodium Screen Process

Wadsworth Pohl is credited with developing the sodium vapor process, also known as yellow screen, which was used extensively in Disney's original version of Mary Poppins. The technique films actors in front of a screen lit by powerful sodium vapor lights, which emit a narrow band of the color spectrum that does not register on the red, green or blue layers of the film. It was refined by Disney's in-house engineer, Petro Vlahos.
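
The holdout matte idea behind the Williams Process carries over directly to digital images. The sketch below is a simplified, hypothetical illustration in Python using tiny grayscale frames; the function names, the backing brightness level and the threshold are assumptions for this example, not a description of the original photochemical process.

```python
from typing import List

Frame = List[List[int]]  # grayscale frame: rows of brightness values (0-255)


def holdout_matte(actor_plate: Frame, backing_level: int, threshold: int = 20) -> Frame:
    """Black silhouette (0) where the actor is, white (1) where the backing shows.

    backing_level and threshold are illustrative assumptions.
    """
    return [[0 if abs(px - backing_level) > threshold else 1 for px in row]
            for row in actor_plate]


def invert(matte: Frame) -> Frame:
    """Reverse print: white silhouette (1) of the actor against black (0)."""
    return [[1 - m for m in row] for row in matte]


def composite(actor_plate: Frame, background_plate: Frame, holdout: Frame) -> Frame:
    """Expose the background through the holdout matte, then the actor through its reverse."""
    cover = invert(holdout)
    return [[bg * h + actor * c
             for actor, bg, h, c in zip(a_row, b_row, h_row, c_row)]
            for a_row, b_row, h_row, c_row
            in zip(actor_plate, background_plate, holdout, cover)]


# Hypothetical 2x3 frames: the actor occupies the middle column.
actor_plate = [[200, 90, 200],
               [198, 120, 200]]       # backing is roughly level 200
background_plate = [[50, 60, 70],
                    [80, 85, 90]]     # e.g. the painted castle

matte = holdout_matte(actor_plate, backing_level=200)
final = composite(actor_plate, background_plate, matte)
```

Because the two mattes are exact opposites, each pixel of the final frame comes from exactly one plate, which is what stops the background from bleeding through the actor.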

From green screen to blue screen

Blue screen: Star Wars Episode V (1980)

The 1980 film Star Wars Episode V - The Empire Strikes Back won the Academy Award for Best Visual Effects. The film showed how compositing technology opened up a universe of possibility through the use of light.

The technology behind the blue screen involves separating a narrow slice of blue light from the full spectrum of visible color. In the filming of Star Wars, blue screens were used at a wavelength of 500 nanometers, the point at which the blue light becomes completely transparent in the composite.

By compositing red, green and blue separations with mattes, cover mattes, and intermattes, using bi-packing techniques on every starship and fighter plane, the filmmakers brought the film to life, with fighter pilot Luke Skywalker leading the charge.

Why does a green screen have to be green?

Green and blue are the colors most commonly used for chroma screen compositing. First and foremost, this is because they are the colors farthest from human skin tone. Otherwise, any color could be used for a transparent effect.
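
One rough way to see this is to compare hues on the color wheel. The sketch below uses Python's standard colorsys module; the sample skin tone RGB value is an assumption picked for illustration, and real skin tones vary widely, so treat the result as indicative only.

```python
import colorsys
from typing import Dict, Tuple

RGB = Tuple[int, int, int]


def hue_degrees(rgb: RGB) -> float:
    """Hue of an RGB color, in degrees around the color wheel (0-360)."""
    r, g, b = (channel / 255 for channel in rgb)
    hue, _saturation, _value = colorsys.rgb_to_hsv(r, g, b)
    return hue * 360


def hue_gap(a: RGB, b: RGB) -> float:
    """Shortest angular distance between the hues of two colors (0-180 degrees)."""
    difference = abs(hue_degrees(a) - hue_degrees(b)) % 360
    return min(difference, 360 - difference)


# Assumed sample skin tone, for illustration only.
SKIN: RGB = (224, 172, 105)

candidates: Dict[str, RGB] = {
    "red": (255, 0, 0),
    "green": (0, 255, 0),
    "blue": (0, 0, 255),
}

gaps = {name: hue_gap(SKIN, rgb) for name, rgb in candidates.items()}

for name, gap in sorted(gaps.items(), key=lambda item: item[1]):
    print(f"{name}: {gap:.1f} degrees from the sample skin tone")
```

For this sample, red sits closest to the skin tone in hue, while green and blue sit much farther away, so a green or blue backing is less likely to key out parts of the actor.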

The greatest limitation of green/blue screen technology is lighting. When the lighting is not matched between the two composited images, the result looks odd to the audience and greatly undermines the believability of the shot.

Is the green screen now obsolete?

Once exclusively a tool for weathercasters and cinematographers today, digital green screen technology is now used every day by people video conferencing with Zoom.

It's a playful technology for a 12-year-old, adding a digitally rendered green screen around her body during a school Zoom call—a quick and easy way for her to hide her messy bedroom from her teacher and peers during a math class.

The digital version of green screen technology is now so pervasive that it is part of ordinary daily life, and it became even more popular as video calls increased during the Covid-19 pandemic lockdowns.

Technology is moving at an accelerated pace. Effects that seem magical and amazing today are likely to become part of regular everyday routines in the future.

Perhaps we will represent ourselves as virtual humans, or place ourselves in a realistic-looking Amazon jungle during an exercise routine.

And it all began with a green screen and a camera...

Stay tuned for Part II: From green screens to LED projection and real-time compositing.

Green screens are a great way to create an interesting and engaging video. They can be used in a number of ways, from creating a simple background to adding text and graphics.

By using green screens, you can make your videos more dynamic and eye-catching, which can help you stand out from the competition. Have you tried using green screens in your own videos?

If you want to take your video production skills to the next level, consider using a green screen.

Our team at Neweb Labs can help you create any kind of special effect you can imagine.

Contact us today to get started!

Enable virtual production through real-time camera tracking.

Montreal - January 18, 2021 - Award-winning mixed-reality production studio Neweb Labs is excited to be partnering with Ncam, the leading developer of real-time tracking technology for films, broadcasts, and live events. As production partner and Canadian representative, Neweb Labs will be adding Ncam’s multi-award winning and patented technology to its arsenal when working with its clients.

Used on everything from Star Wars to the Super Bowl, Ncam Reality lets studios visualize real-time CGI, XR, and set extensions directly in-camera, making it easier to explore the world of virtual production. Ncam’s technology offers unmatched flexibility in the tracking world, as it allows productions to shoot inside and out with a lightweight setup that works seamlessly with industry-standard tools like Unreal Engine.

“Ncam’s camera tracking solution is the gold standard,” said Yves St-Gelais, Founder of Neweb Labs. “They offer a massive leap forward in camera tracking capabilities with unrivalled accuracy. This technology means more flexibility to track and follow anywhere with any camera, and it allows complete freedom of movement without the need to set up markers or carry out prior surveys.”

Neweb Labs is one of the few studios in Canada to provide a turnkey virtual production offering, empowering content creators to shoot visual effects in-camera and make the best creative decisions in real time. This new partnership is the result of Neweb Labs’ long-standing work to bring the most advanced technologies into its virtual production environments. This milestone will enable the company to refine its virtual production pipeline to better serve the TV, cinema and live event production industries.

“We made Ncam Reality with flexibility in mind, so studios and their clients didn’t feel bogged down by the technology,” said Phil Ventre, VP Sports and Broadcast at Ncam. “It sets up in minutes, remembers exactly what goes in and out of the frame so you don’t have to keep calibrating it, and makes it easy to blend photoreal graphics and live footage. Exactly what people need to really jump into virtual production. It’s wonderful to have such a great partner in Canada; we are excited to see all the cool projects Neweb Labs bring to life in the New Year!”

About Ncam

Ncam are the creators of Ncam Reality, the most advanced real-time camera tracker in the world. From Star Wars to the Super Bowl, Ncam Reality is used throughout the broadcast, film and live events industries by some of the biggest brands in the world to visualize photorealistic graphics in real-time. Customers include Amazon, CNN, Disney, ESPN, Netflix, the NFL and Sky TV.

ncam-tech.com

About Neweb Labs

Neweb Labs is a mixed-reality and virtual production studio based in Montreal, Canada. An award-winning studio recognized for the 3D characters it has produced and animated for TV series, Neweb Labs has developed solid expertise in real-time motion capture, 3D rendering, and mixed reality experiences, including holographic experiences, life-size virtual humans, and volumetric content. Neweb Labs markets two proprietary solutions, Maya Kodes™, a virtual Vocaloid human, and Zamigo™, a conversational AI avatar, and is the first studio in Montreal to offer a full-service, turnkey virtual production solution.

Virtual Production is here to revolutionize filmmaking

Virtual production has opened up a Pandora’s box of new and exciting opportunities that stretch the limits of imagination in entertainment. It cuts costs. It eliminates the need for location-based shooting. It reduces post-production times. No umbrellas required!

There is no doubt: the film community is excited. Using a virtual simulated environment brings a story to the screen faster. Film sets can be pre-created and altered on the fly. Actors can interact with large-screen visualizations of virtual sets in a studio, and changes to production can be made in real time.

The technology is now advanced enough to bring virtual and physical worlds together seamlessly; not only on screen, but also during filming.

According to Grand View Research, the global virtual production market (hardware, software, services) was valued at USD 1,260.5 million in 2019. In a recent report, the firm predicts a compound annual growth rate (CAGR) of 15.8% from 2020 to 2027.
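As a rough illustration of what that growth rate implies, the short Python sketch below compounds the 2019 valuation forward. It rests on an assumption the report summary above does not spell out: that the 2019 figure is the base and that the 15.8% rate compounds over eight yearly periods to reach 2027. The function name and constants are made up for this example, and the result is a back-of-the-envelope estimate, not a figure from the report.

```python
def project_value(base_value: float, annual_growth_rate: float, years: int) -> float:
    """Compound a starting value forward: base * (1 + rate) ** years."""
    return base_value * (1 + annual_growth_rate) ** years


# Figures quoted in the text above.
BASE_2019_USD_MILLION = 1260.5
CAGR = 0.158

# Assumption: eight compounding periods take the 2019 base to 2027.
projected_2027 = project_value(BASE_2019_USD_MILLION, CAGR, years=8)
print(f"Projected 2027 market size: about USD {projected_2027:,.0f} million")
```

Under those assumptions the market would roughly triple over the period, ending somewhere above USD 4 billion. Changing the base year or the number of periods shifts the estimate noticeably, which is why the assumptions matter more than the exact output.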

Virtual production, not well understood

There’s no doubt that virtual production is a game changer. Still, filmmakers struggle to embrace the change. Many find the technology mysterious and technical. Neweb Labs CEO Yves St-Gelais sees the confused look on the faces of seasoned film professionals as he explains what virtual production is about. “There are a lot of producers that want to get on this train; there’s a lot of buzz in Hollywood, in the industry,” St-Gelais says. “But few people in cinema really understand what virtual production is about.”

St-Gelais says every director of photography (DOP) will tell you the potential for virtual production is mind-blowing. “But they may not have a clue how LED screens work. They are used to working with surface lighting. There’s a learning curve, and it needs to be overcome.”

Shying away is not the answer. “We are in the midst of a technology revolution in film and television, opening new realms of possibility,” he says. “There’s no need to fear it. Embrace it.”

There are many big benefits to bringing virtual production to a set and all of them are revolutionizing the film industry. Here’s what filmmakers need to know:

1 - Previsualization and control over action in real-time leads to stronger storytelling

Virtual production allows filmmakers to create sets before shooting begins and to adjust them on the fly during shooting, improving both scene and story. This gives producers and directors control over the narrative: they can change the scenery or details instantly at any point in the production pipeline. A recent report by Forrester Consulting, commissioned by Epic Games, revealed that previsualization of scenes and the ability to integrate special effects into productions were the top reasons for adopting the new technology.

In the shooting of The Jungle Book, Jon Favreau used simulcam technology to pre-visualize scale and actor positioning. This was especially useful when filming scenes in which Baloo, the computer-generated 8-foot bear, was on-scene with Mowgli, played by a live actor. The technology reduced post-production time and helped bring the relationship between the characters to life.

Barry “Baz” Idoine, director of photography on The Mandalorian, marvels at the new options that virtual production provides. “The ability to shoot a 10-hour dawn is extraordinary,” he explains. During filming, actors see visuals of virtual sets displayed around them, and special effects are captured in-camera. In a behind-the-scenes explainer, Idoine said that actors on The Mandalorian are more engaged when there’s a virtual set. They see the objects they are pointing at, and know where the road ends.

2 - What you see is what you get

Filmmakers can now review the shot immediately. No more wondering. No more waiting. This saves an inordinate amount of time in post-production.

“Our latest developments enable final pixel quality in real-time, which empowers content creators across all stages of production, and equips them to shoot their visual effects in-camera. It’s a game changer.” – Marc Petit, general manager at Epic Games

3 - Virtual production offers greater creative possibility

When a virtual set is built using a 3D gaming engine such as the Unreal Engine, the possibilities are limited only by imagination. Change the glow of the sunset, or remove the sun altogether. Make a mountain a little higher on the horizon. Make it breathe. Virtual production lets you adjust scenery in real time while you continue shooting your film.

4 - Virtual production substantially reduces costs

For big productions, reshoots can account for 5-20% of production costs. Virtual sets save on travel, transport and location costs, and reduce risk. Post-production compositing and rotoscoping are also minimized. Any assets created in virtual production can be reused in sequels, new episodes and subsequent seasons, further reducing cost.

As productions are delayed due to Covid-19 pandemic lockdowns and restrictions, experts say that now is the time for the entertainment industry to move to virtual production. A recent Deloitte report, The future of content creation: Virtual production – Unlocking creative vision and business value, concluded, “Visualization, motion capture, hybrid camera, and LED live-action are virtual production techniques that belong to the toolset of modern content creation… As the global media and entertainment industry is forced to pause production due to COVID-19, now may be an opportune time to set your organization on a path to innovate the way stories are told.”

The time for filmmakers to embrace virtual production is now. (Rolling! With a virtual sunset providing a stunning backdrop on a blue planet…)

This is a train you don’t want to miss.

Montréal - November 16th, 2020 - The industry leader in virtual studio production Zero Density and award-winning mixed reality production studio Neweb Labs announced a strategic partnership to push the boundaries of virtual production in Canada.

With virtual production transforming the art of filmmaking, Neweb Labs is thrilled to announce that it will be Zero Density's first North American Strategic Solution Partner. Neweb Labs will use Zero Density's platform in its turnkey virtual set solution, combining it with Unreal and Ncam’s technology to deliver a fast-running virtual production pipeline.

“It is important to align with strategic partners that will put you in a position to provide the best solutions for your clients. As such, we are excited to work with Zero Density to bring our clients’ visions to life!” - Yves St-Gelais, Founder, Neweb Labs.

----

About Neweb Labs

Neweb Labs is a mixed-reality and virtual production studio based in Montreal, Canada. An award-winning studio recognized for the 3D characters it has produced and animated for TV series, Neweb Labs has developed solid expertise in real-time motion capture, 3D rendering, and mixed reality experiences, including holographic experiences, life-size virtual humans, and volumetric content. Neweb Labs markets two proprietary solutions, Maya Kodes™, a virtual Vocaloid human, and Zamigo™, a conversational AI avatar, and is the first studio in Montreal to offer a full-service, turnkey virtual production solution.

At Neweb Labs, we bring together the best talents and expertise to create moments of wonder. We explore interactive storytelling and volumetric content creating compelling mixed reality experiences, whether it’s at a live event, flagship store, or across amusement park locations.

About Zero Density

Zero Density is an international technology company dedicated to developing creative products for industries such as broadcasting, live events, and e-sports. Zero Density offers the next level of production with real-time visual effects. It provides Reality Engine®, an Unreal Engine-native platform with an advanced real-time broadcast compositing system, and its proprietary keying technology, Reality Keyer®.

Few follow the world of virtual influencers as closely as Christopher Travers, Founder of VirtualHumans.org. He keeps close tabs on the emergence of virtual influencers: observing them, learning from them, commenting on them. He comes from a long history of building social media platforms, creating impactful growth campaigns, and finding innovative solutions for real-life problems. He has worked with companies such as Spotify, Leadtime, and Atlanta Ventures, and co-founded several start-ups. He comes to the market with a rare and profound understanding of what virtual humanity is all about.

Recently our business development director, Frederic MacDonald, caught up with Christopher, and asked him to share some of his wisdom.

He not only gave us some insight into where this market is headed, but also what brands need to think about in order to create a virtual influencer.

Here they are, in conversation:

Frederic - You’ve talked about the virtual influencer market as a “blue ocean” space. How quickly do you think this space will evolve? When will it be too late to get a foot in?

Christopher - Blue ocean strategy refers to the pursuit of a market with no competition, created by a player lowering costs and differentiating the offering to maximize value. Brands are the beneficiaries of companies leveraging blue ocean strategy to serve them, so regardless of the state of the blue oceans available to companies building within the virtual influencer industry, brands will always enjoy the presence of virtual influencers. As the industry evolves, these virtual influencers will become increasingly normalized as a go-to medium for brands to build close relationships with young, entertainment-obsessed audiences. In the same way that other content mediums, such as MMS, vertical video, and podcasting, experienced unknown beginnings and the unique challenges of industry infancy, the virtual influencer industry will eventually hit a mainstream stride and become a widely adopted content medium for brands and individuals alike.

Frederic - What are the most important traits of a virtual influencer? For an authentic experience, do they need to resemble a real human?

Christopher - There's no single most important trait to a virtual influencer. To create a successful virtual influencer, the creator must champion demographic targeting, character definition, design, storytelling, content capture, pipeline management, people management, content amplification, community management, and more, depending on the role they give the virtual influencer. In many ways, the launch of a virtual influencer greatly resembles the launch of a startup. One trait that makes or breaks most virtual influencers, though, is their ability to build and retain a meaningful audience.

Frederic - What should brands consider before launching a virtual influencer to represent them?

Christopher - Considerations all depend on a brand's specific needs, as the needs of a music label, for example, would vary heavily from the needs of a major consumer goods brand when it comes to virtual influencers. If I had to choose a specific point of advice for brands considering launching a virtual influencer to represent them, though, it would be to work with a company who knows how to build that virtual influencer's audience, as mentioned. What purpose does a branded virtual influencer with little-to-no followers serve? Media is given life when consumed.

Frederic - From your observation, what works? What doesn’t? Can you provide an example of each?

Christopher - Every virtual influencer's success can be accounted for through research. If I had to pick one, though, I would like to know why Thalasya has half a million followers. There's little obvious sense in the popularity of that virtual influencer, though I would ultimately chalk her growth up to PR. There's this outstanding story about a well-executed, highly promising virtual influencer by the name of Kei Wells who failed due to the founding team unraveling, which you can read about in our coverage of what happened to virtual influencer Kei Wells.

I believe every team within the virtual influencer industry will experience greater net success through collaboration rather than competition, which is why I built VirtualHumans.org—to empower all the creators in this space and build valuable inroads for this growing industry. The virtual influencer industry is not a zero-sum game, and there's room for plenty more "competition" who will ultimately make the industry better for all of us.

Reach out to christopher@virtualhumans.org

Virtual humans* can work around the clock: 24 hours a day, 7 days a week. They never get sick, never grow old, and never feel elated or depressed about how many followers they have on Instagram. They can take risks that real humans cannot.

Brands have a lot to benefit from them.

It has become clear that virtual humans are no longer just a trend: they are gaining popularity and momentum, and they are growing in number.

At the beginning of 2020, nine virtual influencers had more than a million followers, according to Hyprsense. The list includes Lil Miquela, Momo, Kaguya Luna, and Colonel Sanders, created by KFC.

Hyprsense research reported that the number of virtual influencers grew from just 112 in 2019 to 170 in early 2020, and the growth continues, as new virtual influencers go live every day.

The big rise of Lil Miquela

Lil Miquela, created by Brud, has become a virtual human sensation.

Her creator, Brud, was recently awarded $6 million in investment funding.

Lil Miquela and her friends are represented by PR firm Huxley and have received ~$30M in investment at a $125M valuation from Spark Capital, Sequoia Capital, M Ventures, BoxGroup, Chris Williams, Founders Fund, and WME.

Lil Miquela also got a mention in MarketingHub’s State of the Influencer Marketing 2020 Benchmark report for her incredible reach across social networks. Compared with an average engagement rate of 0.7%, she clocked an impressive 2.7%. That’s on par with Kim Kardashian, at 2.76%.

When you compare that with Justin Bieber (4.9%) and Beyoncé (1.89%), Lil Miquela is doing very well among her celebrity peers. (Celebrity engagement numbers via Pilotfishmedia.com.)

Brands are taking advantage.

It has become clear that partnering with a virtual influencer is a smart way to reach an audience. Last year, Calvin Klein brought Lil Miquela into its “Get Real” ad campaign with Bella Hadid, drawing over 400,000 views. She has also done promotions for major brands such as 7-Eleven and Taki’s.

Lil Miquela refers to her fans as “Miqueleans.”

She’s not the only virtual human bringing brands to fans. This summer, Samsung partnered with Shudu, a virtual model created by British photographer Cameron-James Wilson, to launch its new Galaxy Z Flip phone.

The Instagram caption read, “the fusion of beauty and technology in this beautiful device is iconic and represents everything Shudu stands for.”

Virtual life in a real world

This summer, IKEA partnered with Aww, bringing the company's virtual influencer Imma to Japan during the lockdown. She spent the time socially isolated in an IKEA-furnished apartment.

A “reality camera” displayed her life in real time on a big screen in a busy Tokyo shopping district, where passers-by could watch her cook, vacuum, do yoga, and post to Instagram when her dog ate her socks.

Real people observed her, even photographed her, living a virtual life in a real environment.

Blurring the line between real and virtual

It is becoming increasingly clear that anything is possible when the virtual and real world become intertwined.

By creating and engaging with virtual humans, brands are gaining influence. By using this novel form of interaction with customers, brands are not only attracting curious fans but also defining a new way of engaging audiences. When well-executed, virtual humans are sexy, smart, creative, and evocative. They can be whatever a brand wants them to be.

Their success depends not on a charismatic, fallible human but on a well-researched plan, with a creative personality backed by measurable data. New technology makes it possible to speak with virtual humans in real time. Their facial expressions, reactions, and movements are highly convincing.

The 3D technology is sophisticated and believable, matching the expressions of a real human, and evoking the same compassion, empathy and feeling that one might feel with a real human.

Wondering what it takes to create your own virtual human influencer? We are discovering it’s just as much a marketing exercise as it is a creative one.

Read our recent conversation with Christopher Travers, the founder of VirtualHumans.org. We explore what works and what doesn’t, and what you need to think about to create a digital personality with influence.

...because the world of virtual humans is getting real...

_

Our definition

*A virtual human is a digital character created with computer graphics software and given a personality defined by a first-person view of the world, made accessible on media platforms for the sake of influence.

You may not have heard of Maya Kodes, but over the past year, the slender blond singer has released a dance pop song on iTunes, recorded an EP set for release in June, performed 30 concerts and amassed 5,500 Facebook followers.

Pretty impressive for a hologram.

Billed as the world’s first interactive real-time virtual pop star, Kodes is the creation of Montreal’s Neweb Labs.

In development for 18 months, she’s the brainchild of Yves St-Gelais, producer of the popular Radio-Canada TV series ICI Laflaque, and a former Cirque du Soleil comedian and director.

Kodes has already been road-tested at the company’s custom-designed holographic Prince Theatre, where she performed a fluffy dance-pop confection called “Boomerang” — the song currently for sale on iTunes — amid a flesh and blood dance crew and in front of a largely tween audience, kibitzing with the show’s emcee in a fromage-filled routine that bordered on juvenile.

It’s also the venue from where Kodes will present, on June 13, a 360-degree.

A Toronto performance is expected before the end of the year, says Neweb spokesperson Élodie Lorrain-Martin.

“We really want to do a world tour and also have her perform in multiple venues at the same time,” says Lorrain-Martin.

Since holograms have already performed — late rapper Tupac Shakur was resurrected at Coachella in 2012 as was the late Michael Jackson at the 2014 Billboard Music Awards — Neweb’s ambition is plausible.

Onstage, Kodes is a chameleon of special effects, changing costumes and skin pigmentation in the blink of an eye; shooting sparkles from her hands and instantly cloning herself into a Maya army.

But what makes Kodes stand apart from Shakur, Jackson and the 3D Japanese anime star Hatsune Miku — who has already toured Japan and North America as a singing hologram — is that she isn’t strictly a playback apparition.

“Every other holographic project on the market right now is all playback,” Lorrain-Martin says. “She’s the first one in the world to be able to interact in real time.”

How Kodes manages to do this involves a bit of smoke-and-mirrors: the onstage Maya is embodied by two people hidden from view.

The first, Erika Prevost, best known as Sloane on the Family Channel’s The Next Step, portrays the physical Maya, her choreographed movements relayed by motion capture cameras and a bank of five computers as she cavorts with onstage dancers. Prevost also provides Kodes’ speaking voice, keeping an eye on her audience through an onstage camera, which lets her gauge the crowd’s response and even indulge in a Q&A if desired.

Then there’s a woman who provides Kodes’ singing voice, whom Lorrain-Martin refuses to identify.

Kodes’ virtual existence even has a back story.

Copyright Toronto Star - By Nick Krewen Music

In some respects, the future of French-Canadian pop music resembles many present-day divas: blonde, slim, attractive. But there’s one very important difference between Maya Kodes and the Keshas of this world—Kodes is a hologram. And while she may not be the world’s first virtual pop star, she’s the only one so far who moves, sings and talks to people in real time.

Kodes is the brainchild of animator Yves St-Gelais and his Montreal-based start-up, Neweb Labs. She has a prototype of sorts in Miku Hatsune, a Japanese virtual pop star with a huge back catalog and a large and breathless following. But unlike Hatsune, whose performances are prerecorded and played like a DVD, Kodes delivers her pop routine live. So far, Kodes has performed around 30 times. Marketed toward tweens, each gig marries uncomplicated dance-pop with digital pyrotechnics, ranging from color-changing costumes to the dazzling electronic sparks that shoot out from her digital palms.

As with any musician's show, each performance is subtly different, and the product of much work behind the scenes. It’s just the nature of that work that’s a little different: Off-stage, dancer Elise Boileau provides the moves, which an army of designers, illustrators and computer programmers translate into digital gestures. Kodes’ songs are also performed live, though the identity of the human behind her voice is guarded under lock and key, to maintain the illusion. She can even answer questions from members of the audience, or respond to what’s going on around her.

Of course, she isn’t perfect. The animation recalls the video game The Sims, and even her “look” is fairly derivative of the most basic kind of Disney princess. But St-Gelais says this is just the beginning. Already, he’s adjusted the design in response to negative feedback from women about her pneumatic figure, which he characterized as “a man’s version of a woman.” Now, he envisages a future for Kodes with multiple characters, an updated aesthetic, and live performances taking place simultaneously all over the world. “That will bring another form of communication, of intimacy in which Maya Kodes will be the core element,” he told Quartz.

For now, the important thing may be building a flesh and blood fan base to support this virtual star. So far, Kodes has an EP, a backstory, and an effervescent Twitter presence—but she’s still awaiting the one key to success that can’t be conjured up online.

Copyright Quartz - Natasha Frost, Reporter

Inside the Montreal company engineering virtual-reality pop star Maya Kodes.

A trip to the South by Southwest festival in Austin, Tex., this month could mark the start of a mixed-reality phenomenon.

Near the turn of the millennium, Yves St-Gelais had a vision. A part of the founding team behind Montreal entertainment juggernaut Cirque du Soleil and a fledgling animation producer, St-Gelais conceived a mixed-reality future where celebrities were not fallible objects of fetish, but ubiquitous, geo-customizable and interactive avatars.

At the heart of this vision was a pop star – a force for good who would speak primarily through music, the world's lingua franca, as well as interact with her audience in their mother tongue, a feat he describes as "bringing art to the soulless." He would name her Maya Kodes and he envisioned a superhero's arc, with story beats similar to that of Spider-Man. Early demos proved promising but fruitless. Never mind the animation quality or the music, computers simply were not fast enough to create a viable, likeable, real-time reactive humanoid.

Two decades later, St-Gelais believes the tech has caught up with his vision.

Armed with a story and a songwriting team, 12-person production crew headed by an Emmy Award-winning animator, her own A&R man, a mountain of proprietary software and hardware, a touring holographic spectacle and two EPs of chart-ready pop songs available on iTunes and Spotify that bring to mind Sia and Lady Gaga, Kodes (pronounced "codes" as in computer, not "kode-es," like a Russian spy) is already the world's first interactive virtual singer. This month, starting with a trip to the South by Southwest festival in Austin, Tex., St-Gelais will set out to discover if Kodes can become its first mixed-reality phenomenon.

On a recent morning, a cheery crew of technicians, programmers and performers arrived at the office of Neweb Labs, which St-Gelais founded in 2015, to run a dress rehearsal for the touring show. A small ground-floor unit in a sprawling commercial park surrounded by body shops in Montreal's industrial Côte-Saint-Paul neighbourhood, the studio space – bifurcated into a test theatre and a work area – is hardly the slick cradle of innovation one might expect from such a company, but charmingly reflects the scrappy mentality of its occupants.

Trickling in, the team sipped coffee "borrowed" from an upstairs cafeteria while setting up their respective work stations. For the technology-inclined, this meant helming computers, assuring programs were booting and code was running smoothly, while others slipped into bodysuits strapped with ball-sensors or donned a head rig resembling the skull of an aardvark.

Gently prodded by Neweb's artistic director, Véronique Bossé, the group – all women save for a technical artist – exchanged knowing glances as the hum of processors grew louder. Before long, a blond humanoid appeared on multiple screens inside the studio and began shadowing a body-suited performer's routine.

"There she is," Bossé exclaimed, addressing the nearest screen. "Hello, Maya."

On the surface, a virtual pop star may seem tame compared to the recent holographic resurrections of legendary artists such as Tupac or Bob Marley. What sets Kodes apart is that she's being performed and rendered in real time.

Kodes's team performs this "soul insertion" through the synchronization of highly guarded proprietary software and a puppeteering duo: A dancer in a motion-capture rig controls her body, while a stationary singer handles her facial movements and voice; both have audience monitors allowing them to react and interact with whatever's happening in the theatre. This occasionally allows Kodes, who is projected onto a holographic screen stretched out across the stage, to slip out of human form and interact with her real and digital surroundings, allowing her to participate in elaborate, otherworldly stage routines – multiplying herself in a psychedelic haze, for example – then answer off-the-cuff audience questions.

At Neweb, Kodes is referred to as if she were actually in the room, not unlike when addressing Santa Claus around children. And, like Santa, she comes with her own convenient if slightly confusing back story: Created to combat the Y2K bug, a glitch turned her code to human. Greeted to the world with music, the new form henceforth documents its existential anthropological study via song.

Arriving at the tour dress rehearsal fresh from the Pollstar Live! convention in Los Angeles, St-Gelais is reservedly excited. In his late 50s, soft-featured with dark-rimmed glasses, he appears like a cross between Walt Disney and late-era Steve Jobs and speaks softly with a pronounced Québécois accent.

A veteran of the entertainment field, St-Gelais now splits his time between Neweb and its big sister, Vox Populi Productions, which has produced the Quebec political satire series ICI Laflaque for the past 15 years. It was working on Laflaque, which uses quick-turnaround 3-D animation to skewer politicians on a weekly basis, that convinced the budding producer that creating real-time, interactive animation was not only possible, but likely the future of entertainment.

In that sense, Maya Kodes is very much St-Gelais's Mickey Mouse, a likable emissary he can use to capture the imagination of a new generation and launch an empire of interactive holograms and AI bots. But first, he has to create his Steamboat Willie. To do this, he's bet big, putting his own money alongside that of private investors into Neweb, as well as receiving $1.2-million from the Canadian Media Fund to have Kodes and her team perform at industry showcases and 1,000-person-capacity venues in Switzerland, Sweden, Germany, Canada and the United States. The future, he says shortly after the rehearsal wraps, is interactive, global and beyond the capabilities of a single human. After all, there can only be one Britney Spears, Beyoncé or Taylor Swift, and each comes with their own very human problems. In contrast, multiple versions of Kodes can work 24/7 around the world without the fear of burnout or language issues.

"We had every big name in the Canadian music business pass through here. They loved Maya, but they said they didn't know what to do with her," St-Gelais explains. "The traditional music-industry model is just that: traditional. [Kodes] is fundamentally an attraction. The market model is not Warner, Sony or Universal, it's Cirque du Soleil."

If Neweb's mission appears out of movies, books or literature, it's because it more or less is. A 2013 episode of Black Mirror titled The Waldo Moment featured an interactive animated avatar running for Parliament; in the 2002 film S1M0NE, a successful director secretly creates an interactive character to star in his films; and, perhaps most famously, Dr. Frankenstein created a humanoid experiment in Mary Shelley's updated Prometheus myth. In all cases, the creations – often born of frustration and their creator's own existential dread – ended up turning on their makers, leaving them desolate or dead.

When I bring this up to St-Gelais, he laughs it off. Kodes is not an extension of himself as much as an evolution of a movement, he explains. The next generation of tech-savvy consumers will not only want to be entertained but also be part of their entertainment – whether through augmented, virtual or mixed reality.

And judging by market trends, St-Gelais has a point.

According to industry forecaster Digi-Capital, augmented reality and virtual reality combined will be a US$110-billion business within five years. And the entertainment sector, second only to gaming, has been a maven in the industry. Although headset technology is still too nascent to create a full-on tipping point, industry trends indicate music will be a leading way in for Generation Z, due to both its sensory immersion and short running time. But while some forward-thinking artists, such as Future Islands, Black Eyed Peas and Run the Jewels, have released VR experiences, for the most part, marquee pop stars have stayed out of the sphere – preferring to keep as much manicured control as possible.

"The technology is a factor, but so is the marketing," explains Jon Riera, one half of Combo Bravo, the Toronto-based creative team behind VR videos for A Tribe Called Red and Jazz Cartier. Riera argues that until the marketing budgets match those of traditional video and there's a centralized digital location where audiences know they can go to find music-based VR, the medium will always suffer from the "cheesy-wow" factor. However, he points out that at least one big-name, owl-loving Toronto artist has expressed an interest in working in the medium. "He just needs Apple to release its own VR headset."

Harold Price at Occupied VR, one of the leading companies working in the field in Canada, sympathizes with Riera's argument. We've already accepted the tools to this future – AI assistants and ARKit-enabled phones – into our home, he says. "What we need is a 'must-see' cultural moment. We need our Star Wars."

St-Gelais believes Kodes could be such a moment. "Look at Hatsune Miku," he says. "She plays to 200,000 people in Japan. And she is just a playback."

Miku, whose name literally translates to "the voice of the future," is Kodes's most obvious predecessor, and many of the expectations of avatar-based musical success can be traced back to her effect on the industry. Invented as a marketing avatar in 2006, Miku is a "Vocaloid" – meaning her voice is completely synthesized – who shot to fame in the late aughts, selling out stadiums in Japan, opening for Lady Gaga's 2014 North American tour and even appearing on The Late Show with David Letterman. As St-Gelais points out, Kodes, who currently has no comparable competition in the market, can not only do everything Miku can, but also be adapted for different geopolitical regions, while feeling just as organic and interactive in each one.

"For me, holography is not the last platform," St-Gelais says, revealing that plans are already in place for a weekly 30-minute talk show with live Q&A on Facebook. "The next step is connecting her to an artificial intelligence, so it's possible to have Maya on your phone or virtual reality."

First though, the Neweb team has to focus on bringing Kodes to the world "in person." The exercise almost already feels antiquated, but St-Gelais has been waiting nearly 20 years for the technology to be ready and he's not going to let this moment pass.

"We're on her ninth body," Bossé says, pointing out the speed at which the software develops, while mentioning there's already talk among the crew that the 10th will be a little more reflective of "real" women.

While the pomp and circumstance surrounding Kodes and Neweb's ambition are admirable, the question of her success still rests on finding a dedicated audience. And, for all the talk of connecting to international, tech-savvy youth waiting for tech to catch up to their demand, for me, Kodes's and Neweb's success lies squarely in their ability to appeal to children. Always the salesman, St-Gelais is keen to discuss the strong international reaction so far.

But it's when I ask him why he created Kodes that his mouth curves up and he affects a Disney glint. "I have children," he says in his Québécois accent. "I make her for my children."

© Copyright 2020 The Globe and Mail Inc. All rights reserved.

Ready to step into the future with a very different kind of performance or brand activation? Check out interactive holographic pop artist Maya Kodes, an innovative superstar from Neweb Labs.

Neweb Labs uses artificial intelligence, holography and motion capture technology to produce 3-D animations and virtual personalities like Maya. “Our augmented reality productions create a real emotional connection to audiences and deliver tremendous wow factor,” says Candace Steinberg, Neweb Labs’ marketing and sales director. “We leverage motion capture for real-time interaction.”

Maya Kodes is a holographic superstar created by Neweb Labs

Maya, hot on the heels of her Boomerang CD release, is an on-stage dynamo, entertaining with spectacular special effects, lively, in-the-moment banter, and choreographed sets alongside her human back-up dancers. Behind the scenes, Neweb’s crew brings her to life, driving her moves through a body-motion capture suit and handling her on-point audience interaction through live voice audio to create an immersive experience. In addition to Maya, Neweb develops live interactive 3-D holograms, including living or deceased celebrities and public figures, and animated caricature-based characters, for any type of custom-branded event such as conferences, trade shows or product launches. The company also provides artificial intelligence technology that pairs a human interface with a holographic terminal or mobile app for virtual customer service greeters, tour guides, and more.

Maya has taken the world by storm. With her incredible voice and cutting-edge dance moves, she wows audiences everywhere she goes. In a future filled with endless possibilities, Maya Kodes is one star that shines bright. Some people say that holographic performers are the future of entertainment, and she is certainly leading the pack. She is sure to keep fans coming back for more, and her live shows are not to be missed! If you're lucky enough to see her in concert, you'll be blown away by her stage presence. Maya is truly a one-of-a-kind performer, and we can't wait to see what she does next!

Copyright Karen Orme TSEvents